How companies use LLMs when adopting AI: ValueXI Case Study

When ChatGPT fell short, it highlighted the importance of strategic implementation. Companies must leverage LLMs effectively, focusing on clear goals and integration into workflows for successful AI adoption.

February 17, 2025

Within a year, large language models (LLMs) have become a hot topic in the business realm. These models are now used to interact with customers, create content, and even write code. Businesses that never considered AI before are now seeing new opportunities with LLMs.

But with these opportunities come risks. LLMs are often seen as a "black box": they can drift from the context, give irrelevant answers, and ultimately disrupt processes. These challenges only add to the complexities typical of any AI project.

Unlocking the Potential of LLM: A Case Study in AI Integration

Businesses are intrigued by the potential of large language models, but monetizing these powerful tools is not straightforward. A meaningful project, such as developing an AI to optimize specific processes, requires significant intellectual and financial investment.

Achieving unique results demands unique data: the data your business has accumulated. You need data science experts and prompt engineers who can prepare the data, ensure privacy, and minimize model errors. You also need people who can seamlessly integrate the new tool into your existing infrastructure.

Under these circumstances, it might seem easier to entrust an LLM with tasks like text correction rather than something with higher business value. However, ambitious projects that fully leverage LLMs are entirely feasible.

We were approached by a client, a Forbes 2000 manufacturing company, that was entering a foreign market with a new gaming equipment brand. The company needed to analyze the effectiveness of its customer support, since the quality of that support was crucial for establishing the new brand in the market. The data consisted of real support dialogues with open-ended questions, so evaluating them accurately required AI.

The case we want to share features all the “favorite” challenges of AI projects:

- Structuring and processing real written speech to create a custom solution

- Using an LLM in an unusual role: as an auxiliary tool for the initial data labeling required before AI training

- Privacy requirements: customer data is GDPR-protected and cannot be transferred to foreign services, and information about the company’s new devices must not leak either

- Extensive prompt engineering

- Further integration of the resulting model into corporate software

- The ambitious goal of completing all this in just a month

Spoiler alert: we solved the problem using a hybrid approach. We used the ValueXI platform to anonymize dialogues and securely access ChatGPT. The LLM was then employed for data labeling, followed by ValueXI again for verifying this annotation and developing a custom AI. As a result, the client identified weak points in their support system, updated the FAQ on their website, and plans to develop a chatbot tailored to frequently asked questions.

Understanding and Applying Large Language Models in Business

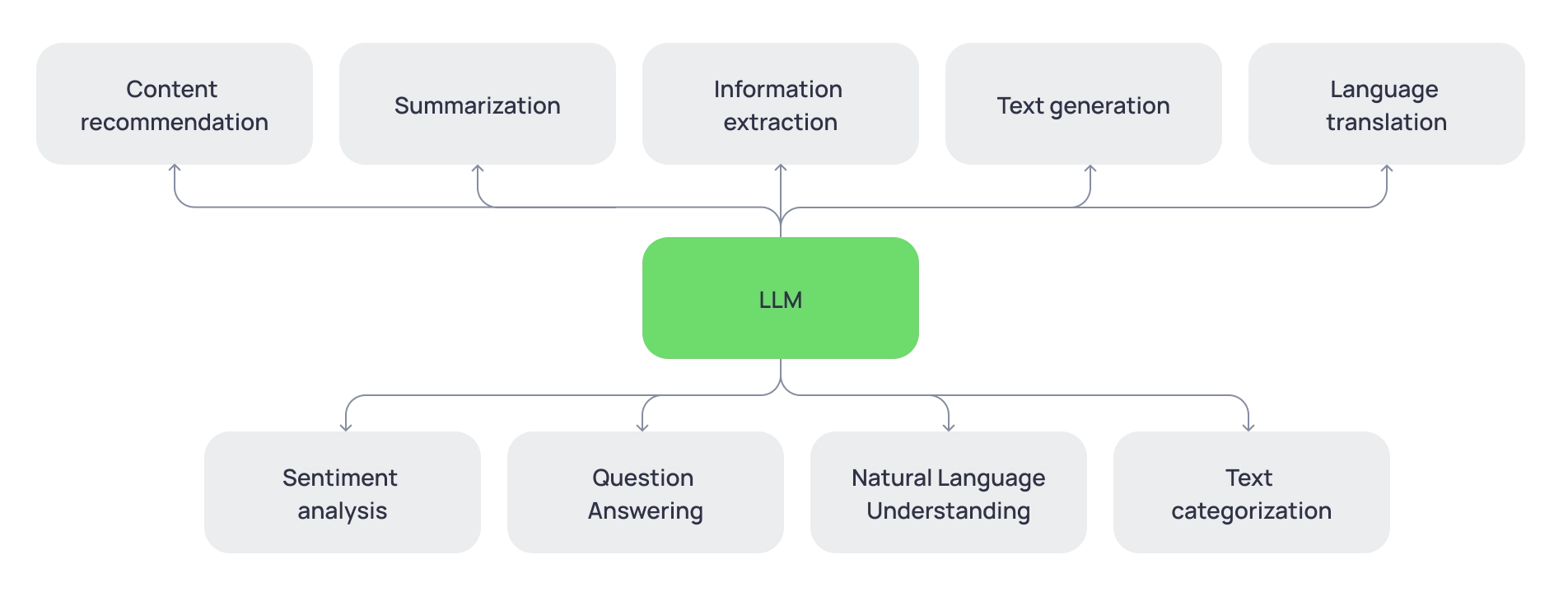

A Large Language Model (LLM) is a massive neural network that processes natural language and helps solve a wide range of data- and language-related tasks. These models can be public or private, more or less complex, and can be used through a chat interface in the browser or by sending queries through an API (although not every model offers one). Some models are open source, such as LLaMA, while others are proprietary with closed code, such as ChatGPT and Claude.

If your task involves natural language processing, LLMs can be very useful for content creation and SEO, recommendation systems, and answering user questions in natural language.

Recently, large language models have started to replace traditional search tools: Perplexity is competing for the positions previously held by Google, and for encyclopedic information many users now turn to ChatGPT instead of Wikipedia. More complex tasks are also being assigned to neural networks: data labeling, trend prediction, coding, and writing simple scripts. However, solving complex tasks with LLMs requires expertise in both programming and machine learning.

Challenges in AI Development and Using LLM “As Is”

Our client needed to develop a tool that provided analytical information based on text dialogues between technical support and customers.

Goals:

- Use an LLM to label the client’s data, essentially converting natural speech into a structured table (LLMs are designed to understand dialogues and extract their essence).

- Utilize the annotated data to develop an AI on the ValueXI platform (designed for targeted data work).
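To make "converting natural speech into a structured table" concrete, here is a minimal Python sketch of one row of such a table and how rows could be serialized to CSV. The column names (`dialogue_id`, `topic`, `client_question`, `solution`, `satisfaction`) and the 1 to 5 satisfaction scale are our assumptions, loosely based on the labels this case study mentions; they are not the project's actual schema.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


# Hypothetical schema for one labeled support dialogue (column names are assumptions).
@dataclass
class LabeledDialogue:
    dialogue_id: str
    topic: str
    client_question: str
    solution: str
    satisfaction: int  # assumed scale: 1 (very unhappy) to 5 (very happy)


def to_csv(rows: list[LabeledDialogue]) -> str:
    """Serialize labeled dialogues into a CSV table with a fixed header row."""
    buffer = io.StringIO()
    header = [f.name for f in fields(LabeledDialogue)]
    writer = csv.DictWriter(buffer, fieldnames=header)
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


rows = [LabeledDialogue("d-001", "pairing", "Controller won't connect", "Reset pairing mode", 4)]
print(to_csv(rows))
```

A fixed header is the point: every labeled dialogue ends up with the same columns, which is what a downstream model needs for training.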

ValueXI is a platform for developing AI models trained on business data. The tool reduces development costs threefold and provides control over the process from data upload to model readiness.

On this project, the platform first helped mitigate the LLM's shortcomings; we then used the classification results to quickly build a custom AI that analyzes technical support performance. All interactions with ChatGPT were conducted through the API, from the ValueXI interface.

But data classification is one of the primary uses of LLMs. Why build an AI on an additional plug-and-play platform?

Privacy and Personal Data Issues

Once you submit personal data in a chat window or via an API, you cannot take it back. Although enterprise versions of large language models guarantee that your data will not be used in its raw form, doubts remain: after all, data is “the new oil.” In addition, the client's personal data may be protected by GDPR, which strictly limits transferring it to foreign platforms.

Lack of Control

One significant challenge of using LLMs is their limited interpretability and transparency. Because large language models are complex neural networks with billions of parameters, understanding why the model made a particular decision is often extremely difficult. This makes it harder to correct errors in the model’s output.

Errors in Output Data

There is no guarantee that an LLM will respond truthfully or stay consistent in our labeling task. The more data you feed it, the higher the chance that the model will start hallucinating: making mistakes, inventing new columns for the table, or inventing user dialogues that never existed.
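One practical guard against these failure modes is to check every model response programmatically before accepting it. The sketch below assumes the LLM was asked to return a JSON array of labeled dialogues; the column set, the satisfaction scale, and the function name `validate_labels` are hypothetical. It rejects rows with invented or missing columns, rows that refer to dialogues never sent to the model, and out-of-range scores.

```python
import json

# Assumed labeling schema; adjust to the columns your prompt actually requests.
EXPECTED_COLUMNS = {"dialogue_id", "topic", "client_question", "solution", "satisfaction"}
SATISFACTION_SCALE = range(1, 6)  # assumed 1..5 scale


def validate_labels(raw_response: str, known_ids: set[str]) -> tuple[list[dict], list[str]]:
    """Split an LLM's JSON labeling output into accepted rows and error messages."""
    try:
        records = json.loads(raw_response)
    except json.JSONDecodeError as exc:
        return [], [f"response is not valid JSON: {exc}"]
    if not isinstance(records, list):
        return [], ["expected a JSON array of labeled dialogues"]

    accepted: list[dict] = []
    errors: list[str] = []
    for i, record in enumerate(records):
        if not isinstance(record, dict):
            errors.append(f"row {i}: not a JSON object")
            continue
        columns = set(record)
        if columns != EXPECTED_COLUMNS:
            # The model invented new columns or dropped required ones.
            extra = sorted(columns - EXPECTED_COLUMNS)
            missing = sorted(EXPECTED_COLUMNS - columns)
            errors.append(f"row {i}: extra columns {extra}, missing columns {missing}")
            continue
        dialogue_id = record["dialogue_id"]
        if not isinstance(dialogue_id, str) or dialogue_id not in known_ids:
            # The model referred to a dialogue that was never sent to it.
            errors.append(f"row {i}: unknown dialogue_id {dialogue_id!r}")
            continue
        score = record["satisfaction"]
        if not isinstance(score, int) or isinstance(score, bool) or score not in SATISFACTION_SCALE:
            errors.append(f"row {i}: satisfaction {score!r} is not an integer from 1 to 5")
            continue
        accepted.append(record)
    return accepted, errors
```

Rejected rows can be re-sent to the model or routed to manual review rather than silently dropped.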

Need for Data Preparation and Quality Re-check

The more complete and higher-quality your dataset is before training, the better the result. If data is insufficient or contains missing values, the model's output will miss the mark. An LLM cannot assess data quality: it is not trained to judge which data your AI will train well on and which it will not.
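An LLM will not flag an unbalanced or incomplete dataset for you, so a simple automated check is worth running before training. This is a simplified sketch using only the Python standard library; the function name, the row-as-dictionary format, and the 60% imbalance threshold are illustrative assumptions, not ValueXI's actual checks.

```python
from collections import Counter


def quality_report(rows: list[dict], label: str, required: list[str], max_share: float = 0.6) -> dict:
    """Basic pre-training checks: missing values per column and class balance of the label.

    max_share is an assumed threshold: if any single class exceeds it,
    the dataset is flagged as imbalanced.
    """
    # Count rows where a required column is absent, None, or an empty string.
    missing = {col: sum(1 for r in rows if r.get(col) in (None, "")) for col in required}
    # Class distribution of the target label, ignoring rows where it is missing.
    counts = Counter(r[label] for r in rows if r.get(label) not in (None, ""))
    total = sum(counts.values())
    shares = {cls: n / total for cls, n in counts.items()} if total else {}
    return {
        "rows": len(rows),
        "missing": missing,
        "class_shares": shares,
        "imbalanced": any(share > max_share for share in shares.values()),
    }
```

In practice, the threshold depends on the task; a rare but important class (for example, very dissatisfied customers) may justify collecting more examples rather than simply rebalancing.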

Subsequent Work Optimization

After creating the model, it is crucial to make the solution convenient for the customer to use, which means integrating the model into existing business processes and systems.

A final observation: today's neural networks were built through extensive training on corpora (collections) of texts, from scientific articles to flame wars on Reddit. Because companies are reluctant to disclose their training sources, user apprehension is growing, and sometimes users push back. For instance, uploading large amounts of vulnerable code to GitHub can lead a neural network trained on that data to learn to write such code.

A Hybrid Approach for Secure Business Solutions: ChatGPT for Data Preparation and ValueXI for Everything Else

We chose ValueXI as the primary tool for developing our solution. This platform allowed us to assemble an analytical AI model in a manner similar to building a website on Tilda. Importantly, ValueXI is a GDPR-compliant tool, enabling us to work with user data, such as anonymizing it on this platform before sending it to ChatGPT for labeling.
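As an illustration of the anonymization step, here is a deliberately simple, regex-based sketch that masks emails, phone numbers, dates, and a supplied list of brand names before any text leaves your perimeter. It is not how ValueXI anonymizes data (the article does not describe that); the patterns are assumptions and would miss many real-world formats, which is why production systems usually combine rules with named-entity recognition.

```python
import re

# Illustrative patterns only: real PII detection needs broader rules or an NER model.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
DATE = re.compile(r"\b\d{1,2}[./-]\d{1,2}[./-]\d{2,4}\b")
PHONE = re.compile(r"\+?\d[\d\s()-]{7,}\d")


def anonymize(text: str, brand_names: list[str]) -> str:
    """Replace emails, dates, phone numbers and listed brand names with placeholder tags."""
    text = EMAIL.sub("[EMAIL]", text)
    # Mask dates before phones so that "17-02-2025" is not mistaken for a phone number.
    text = DATE.sub("[DATE]", text)
    text = PHONE.sub("[PHONE]", text)
    for brand in brand_names:
        # Whole-word, case-insensitive match on each brand name supplied by the caller.
        pattern = rf"\b{re.escape(brand)}\b"
        text = re.sub(pattern, "[BRAND]", text, flags=re.IGNORECASE)
    return text
```

Placeholder tags (rather than deletion) keep the sentence structure intact, so the LLM can still understand the dialogue it is asked to label.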

Development plan:

- Anonymize data in ValueXI so ChatGPT doesn’t handle personal data, dates, brand names, etc.

- Control the neural network through prompt engineering, instructing the model not to generate unnecessary data or hallucinate.

- Ask the neural network to label the data for subsequent training, marking up topics, client questions, solutions offered by the customer support team, and client satisfaction level.

- Develop a classification of dialogue tone and organize the data accordingly.

- Obtain the data in a format suitable for building an AI model (a table with a defined number of filled columns).

- Verify the labeled data in ValueXI to ensure data quality: balance, correctness, and suitability for training.

- Perform vectorization, clustering, and cluster interpretation (machine learning operations).

- Visualize the results in graphs.

- Provide the client with the API of the developed model for further use (for instance, for integration into the client’s ITSM solution).

All actions were performed from the ValueXI interface, which integrates with ChatGPT (and LLaMA) via API. This allowed us to focus the broad capabilities of LLMs on the client's specific tasks.
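Prompt engineering for labeling largely comes down to a strict, machine-checkable instruction. The sketch below only builds the request: a system message that fixes the output format and forbids extra keys or invented dialogues, plus a user message carrying an already-anonymized batch. The message layout follows the common chat format of `role`/`content` pairs used by ChatGPT's API; the prompt wording, column names, and function name are our assumptions, not the prompts used on this project.

```python
import json

# Assumed output columns; the real project's label set was not published.
COLUMNS = ["dialogue_id", "topic", "client_question", "solution", "satisfaction"]

SYSTEM_PROMPT = (
    "You label customer-support dialogues. "
    "Return ONLY a JSON array with exactly one object per input dialogue. "
    f"Each object must have exactly these keys: {', '.join(COLUMNS)}. "
    "Copy dialogue_id from the input unchanged. "
    "satisfaction must be an integer from 1 to 5. "
    "Do not add keys, do not invent dialogues, and use only information present in the text; "
    "if a text field is not mentioned, use an empty string."
)


def build_messages(dialogues: dict[str, str]) -> list[dict[str, str]]:
    """Build chat-style messages (system + user) for one batch of anonymized dialogues."""
    batch = [{"dialogue_id": did, "text": text} for did, text in dialogues.items()]
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": json.dumps(batch, ensure_ascii=False)},
    ]
```

With the official `openai` Python package, such a list would typically be passed as `messages=` to `client.chat.completions.create(...)`. Keeping batches small and the temperature low tends to make the output more consistent, and asking for JSON makes every response easy to check programmatically.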

As a result, we developed an MVP model for analyzing dialogues. Applied to past dialogues, the model helped identify the most frequent questions (which were then added to the FAQ), assess current customer satisfaction with support interactions, and evaluate the effectiveness of support staff. In the future, the model can be used to build chatbots that assist support, among other tasks.
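Once the dialogues are labeled, the retrospective analysis itself can be quite simple. This sketch counts the most frequent topics (candidates for FAQ entries) and averages satisfaction per support agent. The `agent` column is a hypothetical addition: the case study does not say how staff effectiveness was measured, and a fair evaluation would also account for dialogue difficulty and volume.

```python
from collections import Counter, defaultdict


def top_topics(rows: list[dict], n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent dialogue topics (candidates for FAQ entries)."""
    return Counter(r["topic"] for r in rows).most_common(n)


def satisfaction_by_agent(rows: list[dict]) -> dict[str, float]:
    """Average satisfaction score per support agent ('agent' is a hypothetical column)."""
    scores: dict[str, list[int]] = defaultdict(list)
    for r in rows:
        scores[r["agent"]].append(r["satisfaction"])
    return {agent: round(sum(vals) / len(vals), 2) for agent, vals in scores.items()}
```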

Interim Conclusion: The Most and Least Secure Solutions

A question arises: what if security isn’t a top priority for me? And conversely, what if my company cannot afford to transfer data to ChatGPT, even in anonymized form, due to high-security requirements?

Indeed, some queries can be sent to ChatGPT directly, for instance when you want to test a hypothesis quickly and compliance is not a concern. The trade-off is that interacting via the browser (or even the API) means handing your data to another business in exchange for solving the task at hand.

The most secure option is to purchase server equipment and host an open-source LLM (like LLaMA) within a closed perimeter. Data goes into the neural network but is not accessible to external parties.

The difference is cost: this option is considerably more expensive, but it eliminates the risk of data leaking to an external provider.

Conclusion and Practical Insights

Creating an MVP of an AI-powered business application within a month, using large language models, is entirely feasible. You can even kick off the project by testing a hypothesis in ChatGPT, then choose between anonymizing data and keeping it fully within the company. However, in our view, an experienced team is the key to navigating potential risks and saving resources.

The ValueXI platform for AI-related tasks can be utilized in various ways:

- Enhance your product with large language models (LLMs) by accessing them securely through the ValueXI interface.

- Reduce the load on your machine learning specialists by automating data tasks.

- Use the platform's algorithms without relying on LLMs.

However, the best results are achieved with a team. We have extensive experience with LLMs, prompt engineering, developing AI solutions from scratch, and managing projects from concept to full implementation.

Artificial intelligence can be developed in various ways: from scratch or using LLMs. With the ValueXI platform and our skilled team, this process becomes much simpler: you can not only launch your AI but also fully leverage LLMs to address your business challenges.

For more information on application scenarios and business cases of the ValueXI platform for AI development, contact us directly.
