RAG-enabled intelligent knowledge base search on ValueXI

The RAG (Retrieval-Augmented Generation) feature is now available to ValueXI users, enabling them to obtain accurate and relevant answers to standard queries from even very large internal knowledge bases.

October 3, 2024

The ValueXI platform now lets businesses run intelligent searches across internal knowledge bases using Retrieval-Augmented Generation (RAG). This technique combines the generative capabilities of AI with real-time search over relevant information sources. As a result, users receive reliable, precise answers to standard queries from large internal knowledge bases full of complex documentation, such as guidelines and regulations, that is typically hard to navigate.

Grounding the AI model in the organization's own trusted information sources means its answers rely on actual data, which significantly reduces the likelihood of fabricated answers. This approach shortens the time needed for data analysis, reduces errors, cuts costs, and makes communication more efficient.

ValueXI serves as an end-to-end tool for RAG search, with functionality that includes:

- Storing a knowledge base uploaded through an interface or integrated via API

- Converting files into a searchable format

- Searching for and processing relevant information through an AI model

- Generating responses via a chatbot
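As an illustration only (not ValueXI's actual implementation), the step of converting files into a searchable format is commonly done by splitting the extracted text into overlapping chunks tagged with their source, so that each chunk can later be indexed and retrieved on its own. The minimal Python sketch below assumes plain text has already been extracted from the files; the `Chunk` class, the `chunk_document` function, and the 200-word/50-word defaults are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """One searchable piece of a source document (hypothetical structure)."""
    source: str  # file name or document ID the chunk came from
    index: int   # position of the chunk within that document
    text: str


def chunk_document(source: str, text: str, size: int = 200, overlap: int = 50) -> list[Chunk]:
    """Split text into windows of `size` words that overlap by `overlap` words.

    Hypothetical helper, not a ValueXI API. The overlap keeps a sentence that
    straddles a chunk boundary visible in both neighbouring chunks, so
    retrieval is less likely to miss it.
    """
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    # Stop once a window would only repeat words already covered by the previous one.
    for i, start in enumerate(range(0, max(len(words) - overlap, 1), step)):
        chunks.append(Chunk(source, i, " ".join(words[start:start + size])))
    return chunks


# Usage: a toy ten-word "document" chunked into 4-word windows overlapping by 1 word.
doc = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_document("hr_policy.txt", doc, size=4, overlap=1):
    print(chunk.index, chunk.text)
```

Word-based windows keep the sketch dependency-free; real systems often split on sentences, headings, or model tokens instead, and then compute an embedding for each chunk.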

To interpret the retrieved information, the RAG module in ValueXI uses Large Language Models such as ChatGPT or LLaMA. For organizations handling sensitive data, AI models can also be deployed on-premises.

RAG-enabled search markedly improves the work of support services, contact centers, and HR departments. We see notable business interest in this functionality, especially from sectors that handle large volumes of information, such as government institutions, manufacturing, legal firms, pharmaceuticals, fintech, and construction. RAG search is also in demand among organizations with high staff turnover or a rapidly growing workforce, and among large companies with well-developed customer support services.

Stanislav Appelganz

Head of Business Development at WaveAccess Germany

Moreover, the RAG module can be configured to connect automatically to external, continuously updated corporate systems, so that new entries are added to the knowledge base without manual work. It can also be adapted to specialized fields that require deep contextual understanding.

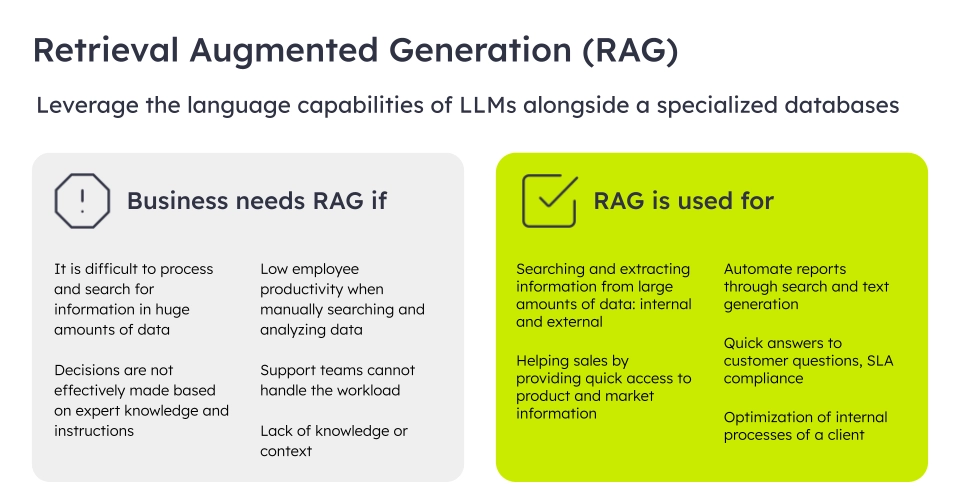

What businesses need RAG search for

RAG search technology is now part of the ValueXI AI Engine, a platform for developing and deploying AI models for businesses in the cloud or on-premises. The solution lets companies apply LLM capabilities to any business data problem or data processing task, process more data faster, and extract more value from data to drive business growth. Here are the main use cases for RAG in business:

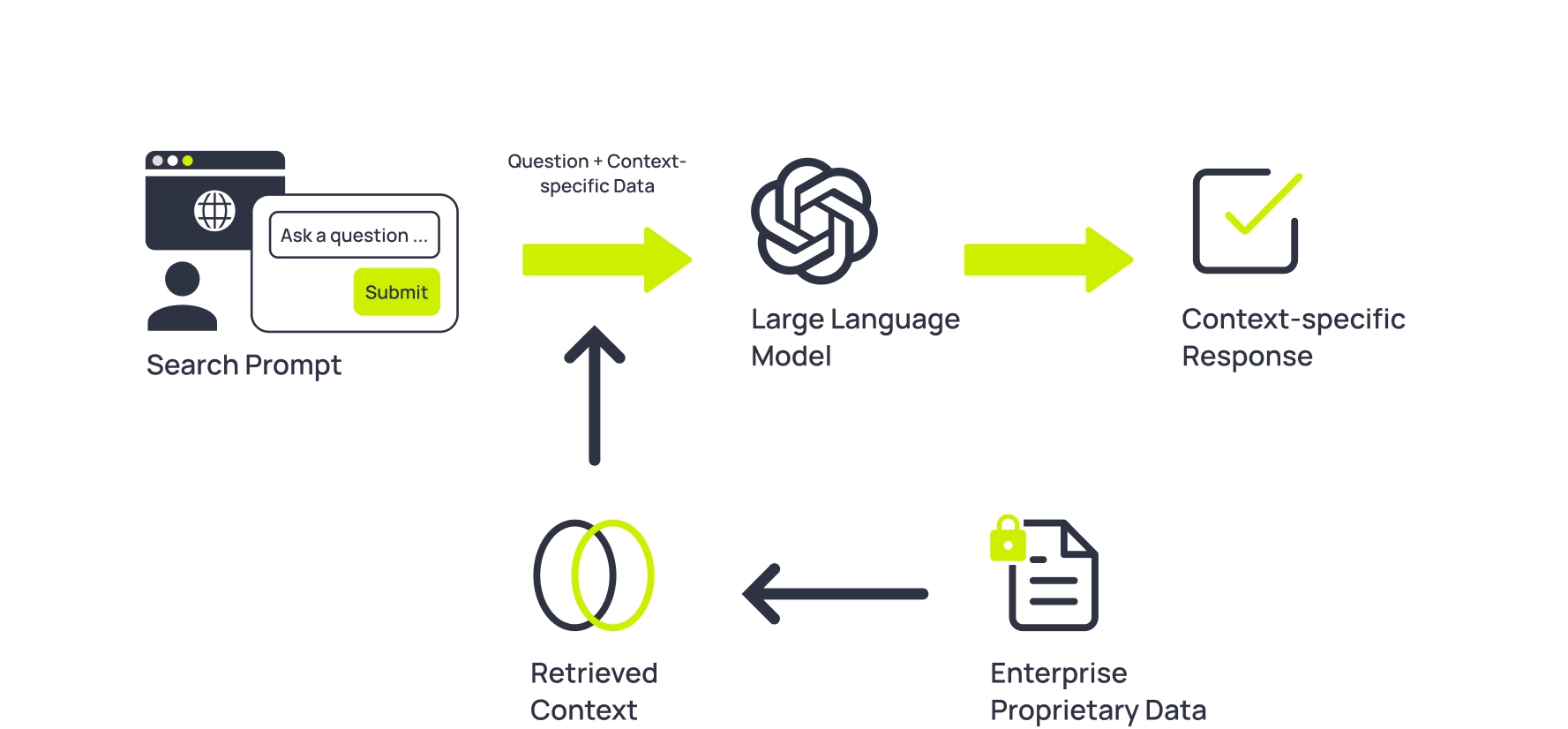

How RAG works with your data

We offer businesses a new approach to searching internal knowledge bases with RAG technology. The user types a question into a chat, and the RAG assistant uses the LLM to produce an accurate, relevant answer based on the right knowledge base. The assistant:

- Recognizes the context of the question

- Finds documents in your knowledge base for that context

- Constrains the LLM answer to the context and documents

- Returns to the user an answer grounded in those documents, minimizing the risk of hallucinations
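The four steps above can be illustrated with a minimal, self-contained Python sketch. It is not ValueXI's implementation: a simple word-overlap cosine similarity stands in for the embedding model and vector search a production RAG system would typically use, and `retrieve`, `build_prompt`, and the sample knowledge base are hypothetical. It shows how documents are ranked against the question and how the prompt restricts the LLM to the retrieved context.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model (assumption)."""
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Hypothetical helper: return up to k documents most similar to the question, best first."""
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(text))), doc_id, text) for doc_id, text in docs.items()]
    scored = [s for s in scored if s[0] > 0]  # drop documents sharing no words with the question
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Hypothetical helper: constrain the LLM to the retrieved passages and ask it to admit gaps."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using ONLY the context below. Cite the [document id] you used. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


# Usage with a tiny, made-up knowledge base.
kb = {
    "vacation-policy": "Employees accrue 2 vacation days per month of service.",
    "expense-policy": "Submit expense reports within 30 days of purchase.",
    "it-policy": "Reset your password every 90 days.",
}
question = "How many vacation days do employees accrue?"
prompt = build_prompt(question, retrieve(question, kb, k=1))
print(prompt)  # this prompt would then be sent to the LLM
```

Telling the model to answer only from the supplied context, and to say so when the answer is missing, is what reduces (though does not fully eliminate) hallucinations; the actual call to ChatGPT, LLaMA, or another LLM is deliberately left out.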

How RAG works

RAG search can make different business processes 15-50% more efficient

- Optimize tech support through fast and accurate responses

  - Improve SLA compliance: reduce request processing time by 40-50%

  - Reduce costs by 20-40% by automating responses

  - Improve CSAT scores by 40% on average

- Accelerate decision-making based on up-to-date information

  - Increase productivity by 25-40% through faster search

  - Reduce errors by 25% by giving employees access to up-to-date versions of instructions, scripts, knowledge bases, and rules

  - Reduce employee fines by 10-25%

- Improve HR processes, especially for mass hiring

  - Save 10-20% of the staff training budget

  - Reduce the administrative burden on HR by up to 25%

  - Simplify onboarding of new employees by 20-45% and reduce turnover

- Increase sales effectiveness by 10-30%

  - Accelerate sales: sales managers answer customer questions on the fly, without waiting for information from a busy specialist

  - Increase website sales: an improved website chatbot helps users make the right choice

Apply LLM and RAG search to your knowledge bases securely with the ValueXI platform

Relevance: applicable in niche industries where the LLM lacks the necessary knowledge or where no specialists are available to train it.

Data protection: more secure than cloud-based LLM solutions, because both the solution and the LLM itself can be deployed inside your own closed infrastructure.

Accuracy of answers: by carefully configuring the context provided for generation, RAG reduces LLM hallucinations.

Support: our expertise at every stage, from validating and preparing the knowledge base to deploying the model in your service and tuning it. This also reduces errors when applying your data.

Contact us today and let's discuss the next steps together! [email protected]

Integrate AI services 3x faster and 5x cheaper with ValueXI
